Netflix's Big Bang Load Spike: Managing Sudden Cloud Traffic Surge

A Study of Load Spikes: How MNCs Handle Them Through Technology

Netflix doesn't need an introduction, but here's a brief one for context!

Netflix's journey from a DVD rental service to a global streaming powerhouse has been nothing short of revolutionary. In its early days, the company disrupted the home entertainment market by offering a more convenient and innovative alternative to traditional video stores. As Netflix grew, so did its need for scalable, flexible infrastructure. Moving from physical DVDs to the cloud marked a pivotal moment, allowing the company to embrace technologies such as virtual machines (VMs), containers, and Kubernetes to scale and manage its platform efficiently. This transformation enabled Netflix to handle massive traffic spikes and global events such as the high-demand Jake Paul vs. Mike Tyson boxing match, which set new records for streaming traffic (even though many viewers reported buffering during the event) and reinforced Netflix's position as a tech-driven entertainment leader.

Netflix has an audience of 600 million and, as of Q4 2024, around 301.63 million global subscribers.

Consider Disney+ Hotstar, one of Netflix's competitors, with around 125.3 million subscribers. It is not the only one: Netflix competes with a variety of streaming platforms globally, each facing similar challenges when it comes to handling sudden traffic spikes.

Why mention global subscribers here?

Netflix and its competitors, such as Disney+ Hotstar, Amazon Prime Video, and others, provide similar types of entertainment: large content catalogs, live events, and new title launches. Whatever the platform, the goal remains the same: increase revenue, maximize profits, and scale infrastructure to meet huge user demand. Managing massive, sudden traffic spikes has been key to Netflix's success and its ability to stay ahead in a competitive market.

How a Netflix-like company or any business makes a profit:

Companies like Netflix make a profit in two ways:

- Through sales and by increasing their subscriber count.
- By optimizing existing solutions with the help of engineering teams so that the app runs smoothly.

A smooth app attracts more users and lets them enjoy uninterrupted streaming with minimal latency. Managing traffic is therefore crucial for Netflix, as it directly impacts the user experience.

Managing traffic spikes is a crucial task for Netflix and its competitors, such as Hotstar. If the DevOps/SRE team gets it right, viewers enjoy a smooth experience and the setup stays resilient.

Imagine you're eagerly waiting for the release of a new title, say, One Piece 2025. As soon as it launches, you rush to log in to the platform releasing it, such as Netflix. But suddenly, playback is interrupted by buffering. Meanwhile, Hotstar is streaming the same title, and users quickly switch from Netflix to Hotstar.

This is why it is crucial for Netflix to handle a large number of customers at once.

Thanks to their resilient setup, Netflix's DevOps and SRE teams think ahead of potential problems and handle load spikes using methodologies such as prioritized load shedding, predictive automated pre-scaling, and reactive fast auto-scaling.
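As a minimal illustration of prioritized load shedding, the sketch below rejects the least important requests first as a service's utilization rises, so critical work (such as starting playback) is protected the longest. The three priority levels, the utilization thresholds, and the `should_shed`/`admitted` helpers are hypothetical assumptions for this example, not Netflix's actual implementation.

```python
from enum import IntEnum


class Priority(IntEnum):
    """Hypothetical request priorities; a lower value is more important."""
    CRITICAL = 0      # e.g. starting playback
    DEGRADED = 1      # e.g. loading artwork or recommendations
    BEST_EFFORT = 2   # e.g. logging or prefetching


# Hypothetical utilization levels (0.0 to 1.0) at which each priority
# starts being shed. Critical traffic is shed last.
SHED_AT = {
    Priority.BEST_EFFORT: 0.60,
    Priority.DEGRADED: 0.80,
    Priority.CRITICAL: 0.95,
}


def should_shed(priority: Priority, utilization: float) -> bool:
    """Return True if a request of this priority should be rejected
    when the service is running at the given utilization."""
    return utilization >= SHED_AT[priority]


def admitted(utilization: float) -> list[Priority]:
    """List the priorities still being served at this utilization."""
    return [p for p in Priority if not should_shed(p, utilization)]
```

For example, `admitted(0.7)` keeps only CRITICAL and DEGRADED traffic. Shedding by priority, rather than randomly, means users may lose a nice-to-have feature before they lose the ability to press play.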

How does Netflix handle and implement these strategies at scale with AWS?

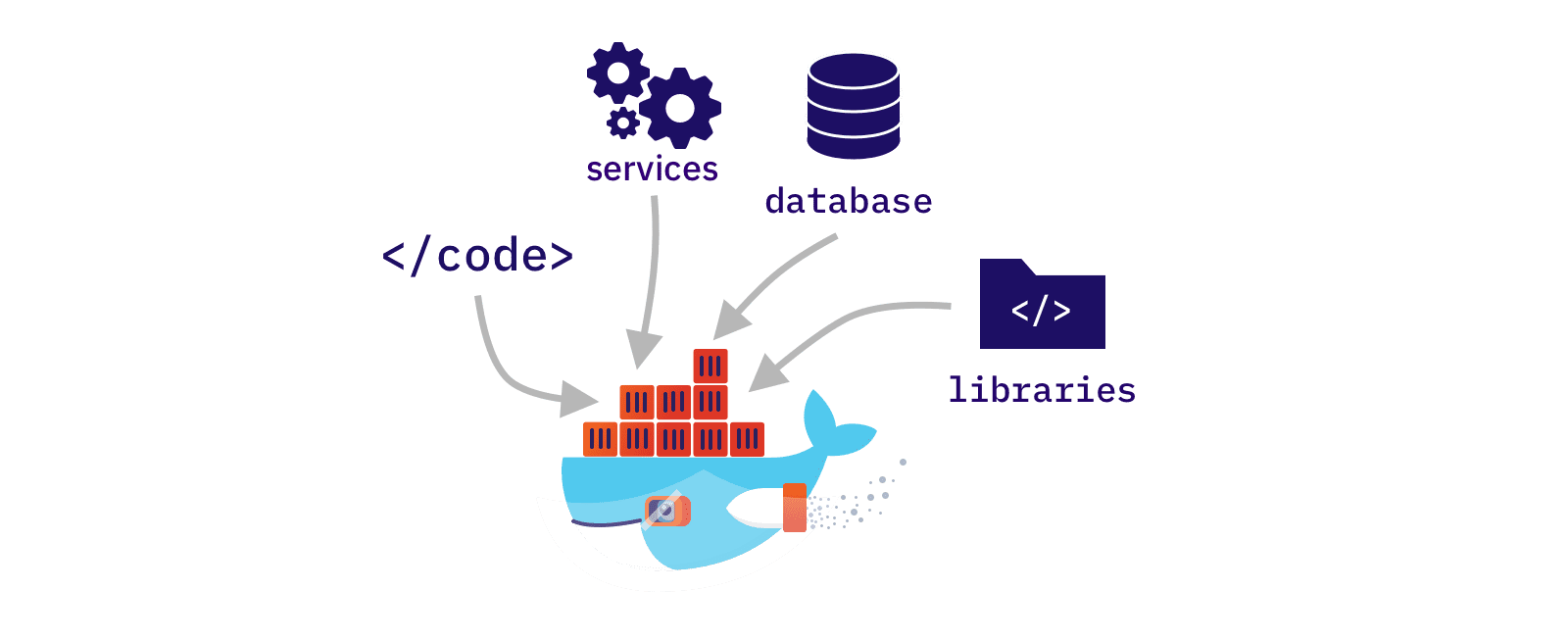

Netflix has used AWS since its early days, when it moved from physical servers to the cloud. It initially ran on virtual machine (VM) instances, with a microservices architecture handling all of its traffic and a cloud-native approach throughout. Netflix later moved from more than 100,000 VMs to Docker containers, which became a key technology in helping it handle load spikes in the cloud.

Why Containers?

As mentioned, Netflix was initially using virtual machines (VMs) with a microservices architecture, where traffic was coming from all over the world and routed to data layers. They adopted a cloud-native approach early on and have been in the cloud for a long time. At that time, they didn't have any data centers of their own. Interestingly, they were already using DevOps practices back then.

The DevOps approach helped them scale their application elastically, making it highly resilient. They conducted a lot of testing, including chaos testing every day, by randomly choosing instances to simulate failures. Since they were already doing all these things, why did they suddenly need Docker/container technology?

The reason for this shift was velocity. Developer velocity was slow at that point in time. Developers had to spend a lot of time managing and maintaining infrastructure, which hindered their ability to innovate and focus on creating new features and services.

Their main priorities were:

- Top priority: delivering new features quickly and ensuring the user experience was smooth, fast, and amazing.
- Resiliency: ensuring availability whenever users needed it, with a focus on high availability and handling failures effectively.
- Efficiency: Docker is efficient in terms of resource management, but Netflix was looking for more than that. They needed a solution that could help them manage and scale their systems more effectively, improving overall system performance and flexibility.

Why did they even choose containers? Are you curious?

They already had immutable infrastructure, built by baking AMIs (Amazon Machine Images).

But they could not do local, iterative development with the same artifact they ran in production. That ability matters because it lets one artifact follow the application through its entire lifecycle. For example, if something went wrong with the app in production, they could bring the exact image used in production back to their local environment and test it to find the cause of the error.

They had a great system for baking AMIs, built around operating-system concepts.

They would create Debian or Ubuntu packages for each use case, often with Upstart scripts. The challenge arose when people wanted to run an app together with its dependencies as one unit, to simplify the build and artifact process.

Developers understood how instances worked, but they needed guidance on which instance type to use, including the appropriate RAM and CPU configuration, from a wide variety of options. To assist with this, Netflix had a separate team of experts who helped them make these decisions.
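To make that sizing question concrete, here is a minimal sketch of the decision developers faced: pick the smallest instance type that covers a service's CPU and memory needs. The catalog lists a few real EC2 instance types with their published vCPU and memory sizes; the `pick_instance` helper and its "fewest vCPUs, then least memory" rule are hypothetical simplifications, since real choices also weigh price, network, and storage.

```python
from typing import NamedTuple, Optional


class InstanceType(NamedTuple):
    name: str
    vcpus: int
    memory_gib: int


# A few real EC2 instance types with their published vCPU and memory sizes.
CATALOG = [
    InstanceType("c5.large", 2, 4),
    InstanceType("m5.large", 2, 8),
    InstanceType("r5.large", 2, 16),
    InstanceType("c5.xlarge", 4, 8),
    InstanceType("m5.xlarge", 4, 16),
]


def pick_instance(vcpus: int, memory_gib: int,
                  catalog: list[InstanceType] = CATALOG) -> Optional[InstanceType]:
    """Hypothetical rule: return the smallest type (fewest vCPUs, then least
    memory) that satisfies both requirements, or None if nothing fits."""
    fits = [t for t in catalog if t.vcpus >= vcpus and t.memory_gib >= memory_gib]
    if not fits:
        return None
    return min(fits, key=lambda t: (t.vcpus, t.memory_gib))
```

Even this toy version shows why a dedicated team was needed: the right answer flips between compute-, general-, and memory-optimized families depending on small changes in requirements. A container platform hides this choice behind a simple CPU/memory request.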

Netflix came up with a plan and decided to build its own container platform. After shifting to their own container platform, they were able to see significant efficiency gains, especially in running complex infrastructure algorithms at scale. This shift allowed them to reduce processing times from a month down to just a week, outperforming their competitors.

Then the next challenge for Netflix began: the debugging cycle!

They had a distributed, eventually consistent set of many repositories; they didn't have a monorepo for their source code.

They had all these microservices acting as consumers of shared code. When they changed something such as the build infrastructure or the cloud platform libraries, or modified a microservice that a team of 10 members depended on, what happened then?

Typically, their own tests passed. But when a dependent team tested the change for deployment to production, it suddenly failed! With no other choice, they had to start debugging from scratch to identify the issue.

So they came up with a solution!

They built a massively parallel build and test system. Now, when a shared component changes, they can automatically run the tests of every team that depends on it, all in one go!

That can save something like 100 hours of debugging for the dependent systems.
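A minimal sketch of the idea behind such a system: given a changed component, walk the dependency graph in reverse to find every direct and transitive consumer, then run all of their tests in parallel. The module names, `affected_modules`, `verify_change`, and the placeholder `run_tests` are hypothetical; this is not Netflix's build system.

```python
from collections import deque
from concurrent.futures import ThreadPoolExecutor

# Hypothetical dependency graph: module -> modules it depends on.
DEPENDS_ON = {
    "platform-lib": [],
    "build-infra": [],
    "auth-service": ["platform-lib"],
    "login-ui": ["auth-service", "platform-lib"],
    "recommendations": ["platform-lib", "build-infra"],
    "billing": ["auth-service"],
}


def affected_modules(changed: str, depends_on: dict[str, list[str]]) -> set[str]:
    """Return every module that depends on `changed`, directly or transitively."""
    # Invert the graph so we can walk from a component to its consumers.
    consumers: dict[str, list[str]] = {}
    for module, deps in depends_on.items():
        for dep in deps:
            consumers.setdefault(dep, []).append(module)

    affected: set[str] = set()
    queue = deque([changed])
    while queue:  # breadth-first search over consumers
        for consumer in consumers.get(queue.popleft(), []):
            if consumer not in affected:
                affected.add(consumer)
                queue.append(consumer)
    return affected


def run_tests(module: str) -> bool:
    """Placeholder for a real test run; always passes in this sketch."""
    return True


def verify_change(changed: str, depends_on: dict[str, list[str]]) -> dict[str, bool]:
    """Run the tests of the changed module and all its consumers in parallel."""
    targets = sorted(affected_modules(changed, depends_on) | {changed})
    with ThreadPoolExecutor() as pool:
        return dict(zip(targets, pool.map(run_tests, targets)))
```

Changing `platform-lib` here pulls in `billing` even though `billing` never imports it directly; that transitive case is exactly the kind of breakage that used to surface only in production.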

Containers also suited smaller runtimes such as Node.js. Developers who preferred Node.js for their edge applications no longer needed to understand the underlying infrastructure; they could focus on coding their apps without worrying about packaging, instance types, or resource requirements. As a result, developers worked faster and had more time to innovate.

The best part is that Netflix built its own container platform rather than relying on existing container management solutions. Those platforms focused on data centers, abstracted away the cloud underneath them, or aimed to support multi-cloud architectures. Since Netflix was primarily focused on AWS at the time, it built its platform to integrate tightly with, and be finely tuned for, AWS.

With their cloud platform already using microservices, they just needed to add the final puzzle piece: a container cluster manager that would seamlessly fit into their existing cloud infrastructure, helping them work faster.

They were focused on one thing. Rather than adopting other solutions that bundled continuous delivery features such as service discovery and telemetry, which their cloud platform already provided, they needed a container cluster manager similar to the VM cluster manager Netflix had used earlier.

An important aspect was that they weren’t initially prepared to handle such a large number of clients simultaneously. However, by choosing the right technology, they were able to efficiently handle load spikes and scale their application.

Why and Where Did It All Begin?

It all began with a batch processing system! In this system, developers only had to define how a job should work. They didn't need to worry about things like instances or auto-scaling groups; those tasks were handled by another team, such as the Ops team.

Jobs simply ran on shared resources until they completed. Even before building their own container management platform, they had been using container technology; it wasn't Docker, but it followed a similar concept.

Their batch workflow system, Meson, and their stream processing system, Mantis, relied strictly on cgroups, the same technology powering Docker and Mesos. This gave them simple isolation that helped them early on. The catch was that each system was very strict about its packaging format.

For example, to run a Spark job, you had to move away from the Mantis/Meson job formats and build packages in the specific required format from scratch. Any task that didn't fit these frameworks became a tedious process to learn and implement from the ground up!

So, they developed a project called Titus.

Titus is a container execution engine with Docker at its core and deep AWS integration. Instead of developers managing resources themselves, Titus manages and optimizes resources on behalf of all the jobs submitted to it.

At the time, it was primarily for batch job management.

In Netflix's world, a batch process might be an algorithm-training pipeline that spans multiple languages, filters and merges data, and is finally packaged as a Docker container! That container then runs at massive parallel scale on their infrastructure. They also use GPUs for scenarios such as personalized recommendations with deep learning networks, and AWS offering GPUs on demand was a big win for them. Other use cases include media encoding of raw video, watermarking (adding digital watermarks to time-sensitive PDFs), and generating daily reports for Open Connect.

Batch jobs were effective, but they could not cover every workload in a fast-changing world! So, beyond batch jobs, they started adding support for services.

Now, you might think services are just like long-running batch jobs, but they’re not!

Services are more complex than batch jobs, and Netflix explored and implemented them gradually, integrating them with AWS and Docker. Services are constantly resized and have auto-scaling requirements. They also carry more state, such as whether an app is running: a service starts, gets discovered, and only then can handle traffic.
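The extra state that services carry can be pictured as a small state machine. In this minimal sketch, an instance must start and be discovered before it may receive traffic. The state names, the allowed transitions (including the DRAINING step before shutdown), and the `ServiceInstance` class are hypothetical assumptions for illustration, not Titus internals.

```python
from enum import Enum


class ServiceState(Enum):
    STARTING = "starting"
    DISCOVERED = "discovered"  # registered with service discovery
    SERVING = "serving"        # healthy and receiving traffic
    DRAINING = "draining"      # assumption: stop new traffic before shutdown
    STOPPED = "stopped"


# Hypothetical allowed transitions for a long-running service.
TRANSITIONS = {
    ServiceState.STARTING: {ServiceState.DISCOVERED, ServiceState.STOPPED},
    ServiceState.DISCOVERED: {ServiceState.SERVING, ServiceState.STOPPED},
    ServiceState.SERVING: {ServiceState.DRAINING},
    ServiceState.DRAINING: {ServiceState.STOPPED},
    ServiceState.STOPPED: set(),
}


class ServiceInstance:
    def __init__(self, name: str) -> None:
        self.name = name
        self.state = ServiceState.STARTING

    def move_to(self, new_state: ServiceState) -> None:
        """Change state, rejecting transitions the lifecycle does not allow."""
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(
                f"{self.name}: {self.state.value} -> {new_state.value} not allowed")
        self.state = new_state

    def can_receive_traffic(self) -> bool:
        # Only a started, discovered, and healthy instance gets traffic.
        return self.state is ServiceState.SERVING
```

A batch job, by contrast, would need little more than "running" and "finished", which is why services demanded more from the platform.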

As mentioned, Netflix runs on AWS, which offers many features that enhance Netflix's user experience, such as auto-scaling, Amazon CloudWatch for high-resolution metrics monitoring, and on-demand compute to handle load spikes and similar scenarios.

Netflix has a global content delivery network (Open Connect) that stores all of its video assets. It is designed to efficiently stream TV shows and movies by bringing content closer to viewers. Launched in 2011, it was developed to address the growing demand for Netflix streaming and improve collaboration with Internet Service Providers (ISPs). The network uses a proactive caching solution, significantly reducing upstream network demand. Open Connect is tailored specifically to Netflix's needs, enhancing performance and scalability.

Netflix's primary footprint typically spans four AWS regions:

- us-west-2 (Oregon)
- us-east-2 (Ohio)
- us-east-1 (Northern Virginia)
- eu-west-1 (Ireland)

One interesting aspect of Netflix's system design is its use of Kubernetes, a container orchestration system that continuously compares the actual state of running workloads with the desired state and corrects any drift without human intervention. This is one of the key features Netflix relies on: because Kubernetes monitors resources continuously, the platform stays up 24/7, whether or not anyone is watching at that moment.

Kubernetes uses a control plane and worker nodes (often described as a master-worker architecture). If a node goes down, Kubernetes reschedules its workloads onto healthy nodes, and traffic is routed only to healthy instances, often without the client even noticing.
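That "compare desired with actual, then act" behavior is the reconciliation loop at the heart of Kubernetes controllers. The sketch below is a toy, in-memory model of one reconciliation pass; it does not use the real Kubernetes API, and the `Deployment` class and `reconcile` function are hypothetical names for illustration.

```python
import itertools
from dataclasses import dataclass, field

_next_id = itertools.count(1)  # source of unique pod names


@dataclass
class Deployment:
    name: str
    desired_replicas: int
    running: set[str] = field(default_factory=set)


def reconcile(dep: Deployment, failed: set[str]) -> list[str]:
    """One pass of a toy control loop: observe, compare, act.

    Removes pods reported as failed, then starts or stops pods until the
    actual count matches the desired count. Returns the actions taken."""
    actions = []
    for pod in sorted(dep.running & failed):
        dep.running.discard(pod)
        actions.append(f"remove {pod}")
    while len(dep.running) < dep.desired_replicas:
        pod = f"{dep.name}-{next(_next_id)}"
        dep.running.add(pod)
        actions.append(f"start {pod}")
    while len(dep.running) > dep.desired_replicas:
        pod = max(dep.running)  # stop an arbitrary (here: highest-named) pod
        dep.running.discard(pod)
        actions.append(f"stop {pod}")
    return actions
```

Running `reconcile` repeatedly is what makes the system self-healing: a failed pod is simply a gap between desired and actual state, and the next pass closes it without anyone being paged.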

Additionally, the content from one region is replicated to other regions to avoid issues during a regional failover.

By the way, why do they need an active architecture?

Technically, the system may appear active, but behind the scenes, components may be in a standby or idle state. When a request is made, the necessary function is triggered, and the app gains access to the CDN content. There are several other tools, integrated with Kubernetes, working behind the scenes to ensure that these processes happen in an optimal way.

Netflix chose an active-active architecture to support region failover. Region failover is a mechanism that drains traffic from one region (e.g., us-west-2) and reroutes it to another (e.g., us-east-1) in case of an issue or maintenance. Because the traffic is redirected seamlessly, the application keeps functioning without noticeable interruption, ensuring high availability and minimal downtime.

The outcome of this approach is that the user experience remains more or less seamless for the users.
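A minimal sketch of the traffic arithmetic behind a failover: the drained region's share is spread over the remaining regions. The proportional split and the `fail_over` helper are assumptions for illustration (the example in the text sends traffic to a single region, which corresponds to passing only two regions); real traffic steering at Netflix is more involved.

```python
def fail_over(weights: dict[str, float], drained: str) -> dict[str, float]:
    """Drain one region and spread its traffic share over the others.

    Assumption: traffic moves in proportion to each remaining region's
    current share. Returns new shares; the drained region gets 0."""
    if drained not in weights:
        raise KeyError(drained)
    remaining = {r: w for r, w in weights.items() if r != drained}
    total = sum(remaining.values())
    if total <= 0:
        raise ValueError("no healthy region left to absorb traffic")
    moved = weights[drained]
    shares = {r: w + moved * w / total for r, w in remaining.items()}
    shares[drained] = 0.0
    return shares
```

With four equal regions at 25% each, draining us-west-2 raises every survivor to about 33%, a one-third jump in load that lands within the failover window. That is why a failover is itself a load spike for the regions absorbing the traffic.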

Netflix has experienced huge surges in user traffic, both in the past and today. For instance, Netflix's Starts per Second (SPS) graph shows the number of playback starts, that is, users pressing play, every second. Netflix uses proxy metrics like this to track and balance load across its regions. You can observe in the graph how traffic patterns vary across different regions.

The graph starts at 12 AM, when few users are active. Traffic peaks at 12 PM, when most users are active on Netflix. This daily cycle repeats day after day, month after month.

This everyday pattern looks very different from traffic during special peaks, such as live events or the launch day of a new title.

Another key difference is that peak times vary from region to region (and time zone to time zone) throughout the day.

Most of the time, Netflix traffic follows this predictable daily pattern, with users streaming during peak hours, but there are exceptions, such as region failovers, congestion, and retry storms, as seen in the picture below.

We are going to discuss how Netflix handles failover and manages huge traffic loads based on the graph above.

In a region failover, Netflix drains all traffic from a region such as us-east-1, and the other regions eventually take over that traffic. Netflix performs region failovers for a few reasons.

Do you know what they are? Here they are:

- Bad software push: if a bad software push occurs, the team will want to reroute traffic from that region to another.
- Region impairment: the region itself may be impaired for one reason or another.
- Performance failover: the most common reason. The team performs it on purpose, often, to test the resilience of the app setup.

These exercises are core to how Netflix keeps its traffic handling resilient. The team practices them week after week, month after month, to guarantee the site is always up. In fact, the graph above is simply a pre-planned test performed by Netflix to ensure smoother business operations. The interesting part is that they intentionally create self-induced load spikes, almost like breaking their own system on purpose. This way, if something goes wrong in the future, they can better understand the potential causes and quickly implement a solution.

But how do they reroute traffic during live events with huge numbers of user requests?

Well, it’s an interesting question. Netflix's development team or algorithm experts need to come up with a plan. With that plan, they must reroute traffic from one region to another as quickly as possible. The goal is to reroute traffic to another region and protect the affected region in less than 2 minutes, or even faster.

Another challenge arises when they restore traffic to the same region. They do it gradually, yet much faster than before, and this can lead to an even bigger load spike, because the restored region starts from scratch.

In this scenario, the region sits at zero RPS (requests per second), with no traffic flowing through it. The Netflix SRE team then quickly brings traffic back up to its normal operating level.
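The restore can be pictured as a ramp from zero RPS back to the region's normal level. This minimal sketch assumes a geometric ramp that ends exactly at the target; the `restore_schedule` helper, the doubling factor, and the step count are hypothetical choices, not Netflix's actual restore policy.

```python
def restore_schedule(target_rps: float, steps: int,
                     growth: float = 2.0) -> list[float]:
    """Traffic level (RPS) for each step of bringing a drained region back.

    Assumption: traffic grows geometrically by `growth` per step and the
    final step lands exactly on `target_rps`."""
    if steps < 1 or growth <= 1.0:
        raise ValueError("need steps >= 1 and growth > 1")
    first = target_rps / growth ** (steps - 1)
    return [first * growth ** i for i in range(steps)]
```

For a region that normally serves 8,000 RPS, `restore_schedule(8000, 4)` ramps through 1,000, 2,000, 4,000, and 8,000 RPS. More steps or a smaller growth factor give gentler jumps but a slower restore; restoring faster means fewer, larger steps, and each step is a load spike for cold services in that region.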

There are two types of traffic surges seen by the Netflix team:

Short surges:

- Retry storms: servers are overwhelmed by repeated retry attempts, leading to service disruption.
- Auto-scaling limits: even with auto-scaling, the system may not be able to fully handle the incoming load.
- Client-side bugs: issues such as outdated app versions or devices (e.g., old Android versions) causing failures.

Long surges:

- New title launch: a surge occurs when a new title is released, such as a new season of One Piece, Wednesday, Squid Game, or Solo Leveling Season 2. If a title launches at 12 AM, many users try to open the app and start the video at the same time, causing a long surge that can last for several hours.
- Live events: live events, such as a World Cup broadcast on Netflix, can also cause a long surge. When many users try to log in and watch the event live at the same time, the system may reach its capacity limit. For example, if the system can handle only 1,000 users at once, any additional users will experience delays or issues.
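The auto-scaling limit and the 1,000-user capacity example can be made concrete with a target-tracking calculation. The scaling rule below has the same shape as the formula documented for the Kubernetes Horizontal Pod Autoscaler; the helper names and the numbers (10 replicas of 100 users each, matching the 1,000-user example) are hypothetical.

```python
import math


def desired_replicas(current: int, metric: float, target: float,
                     max_replicas: int, min_replicas: int = 1) -> int:
    """Target-tracking rule, same shape as the Kubernetes HPA formula:
    ceil(current * metric / target), clamped to [min_replicas, max_replicas]."""
    wanted = math.ceil(current * metric / target)
    return max(min_replicas, min(wanted, max_replicas))


def unserved_users(users: int, replicas: int, users_per_replica: int) -> int:
    """Users left without capacity once every replica is full."""
    return max(0, users - replicas * users_per_replica)
```

If a live event brings 1,500 users to 10 replicas (150 users each against a target of 100), the rule asks for 15 replicas. With a cap of 10, `unserved_users(1500, 10, 100)` leaves 500 users without capacity. Even without a cap, new replicas take time to start, so a short surge can come and go before reactive scaling catches up.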

Netflix uses thousands of microservices to handle the scale and complexity of its platform. To manage the resulting complicated call graphs and ensure reliability, Netflix has built sophisticated monitoring, fault-tolerance, and resilience-testing tools such as Hystrix (a latency and fault-tolerance library) and Chaos Monkey (which randomly terminates instances). These systems help keep the platform resilient and scalable despite the challenges of such a distributed architecture.

Because Netflix is built from microservices, a 2x load spike at the edge does not turn into a uniform 2x everywhere. Imagine the login page is served by several microservices: how each one behaves under the spike depends on where it sits in the service call graph, what it does, and how the SDE teams designed that graph.

Because of microservices, the result of a load spike varies. One service might experience a 2x load, another might experience only a 1x increase or even less, depending on its functionality. Some services may even see an amplified load spike, resulting in a 4x load spike.
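To see how one edge spike becomes different multipliers, this sketch propagates requests per second through a small call graph. A 2x spike enters at a login page, a second entry point stays flat, and retries on one overloaded edge double its traffic. The graph, the traffic numbers, the retry factor, and the `propagate` helper are all hypothetical, chosen for illustration.

```python
from collections import defaultdict
from graphlib import TopologicalSorter

# Hypothetical call graph: caller -> [(callee, calls per request)].
CALL_GRAPH = {
    "login-page": [("auth", 1), ("profile", 1)],
    "browse-page": [("profile", 1), ("catalog", 1)],
    "auth": [("token-store", 1)],
    "profile": [],
    "catalog": [],
    "token-store": [],
}


def propagate(graph, entry_rps, retry_factor=None):
    """Return the requests per second each service receives.

    entry_rps: client traffic arriving at each entry service.
    retry_factor: optional {(caller, callee): multiplier} modelling retries
    on an overloaded edge (1.0 means no retries). The graph must be acyclic."""
    retry_factor = retry_factor or {}
    # graphlib wants node -> predecessors, i.e. each service's callers.
    callers = {svc: set() for svc in graph}
    for caller, edges in graph.items():
        for callee, _ in edges:
            callers.setdefault(callee, set()).add(caller)

    load = defaultdict(float, entry_rps)
    # Visit callers before callees so each service's load is final when used.
    for svc in TopologicalSorter(callers).static_order():
        for callee, calls in graph.get(svc, []):
            load[callee] += load[svc] * calls * retry_factor.get((svc, callee), 1.0)
    return dict(load)


normal = propagate(CALL_GRAPH, {"login-page": 100, "browse-page": 100})
spike = propagate(CALL_GRAPH, {"login-page": 200, "browse-page": 100},
                  retry_factor={("auth", "token-store"): 2.0})
multipliers = {svc: spike[svc] / normal[svc] for svc in normal}
```

The login spike is 2x at the edge, yet `profile` sees only 1.5x (half its traffic comes from the unaffected browse page), `catalog` sees no change, and `token-store` sees 4x because retries double an already doubled load.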

So now the question arises: how can each service survive such uneven spikes?

To tackle this, Netflix uses the concept of buffers.

Success buffer: the ability of a service to absorb a load spike while still returning a success to the client device. The best part is that the client is often unaware of any degradation and continues using the app as normal.

Failure buffer: this serves a different purpose, preserving the health of the system. Users whose requests fall into this buffer may see issues such as slow or spinning pages; the system tries to keep the page alive but eventually returns an error message to the user. Once load exceeds the failure buffer, the service enters congestive failure mode.

So what is congestive failure mode?

It is the reason outages happen!

It occurs when a service is so busy throttling and rejecting requests that it can no longer make forward progress. That is when outages occur.

In the example above, the service has a 2x success buffer, allowing it to handle twice the normal traffic successfully without clients noticing any difference. On top of this, it can absorb a 4x spike, but it returns error messages to clients. This is an acceptable situation: the service behaves as the SRE team intended and preserves its own health.
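The three zones can be expressed as a simple classification of load relative to normal. This minimal sketch treats the 2x and 4x figures from the example above as total multiples of normal load; if "4x extra" is meant on top of the success buffer, pass a larger `failure_buffer`. The `Zone` enum and `classify_load` helper are hypothetical.

```python
from enum import Enum


class Zone(Enum):
    SUCCESS_BUFFER = "served normally"
    FAILURE_BUFFER = "healthy, but some requests get errors"
    CONGESTIVE_FAILURE = "outage risk"


def classify_load(multiplier: float, success_buffer: float = 2.0,
                  failure_buffer: float = 4.0) -> Zone:
    """Classify load (1.0 = normal) against a service's buffers.

    Assumption: both buffers are total multiples of normal load."""
    if not 1.0 <= success_buffer <= failure_buffer:
        raise ValueError("need 1.0 <= success_buffer <= failure_buffer")
    if multiplier <= success_buffer:
        return Zone.SUCCESS_BUFFER
    if multiplier <= failure_buffer:
        return Zone.FAILURE_BUFFER
    return Zone.CONGESTIVE_FAILURE
```

Applied to the amplified multipliers of a microservice call graph, a 1.5x service stays in its success buffer, while a 4x service is already at the edge of its failure buffer, and any further retries would push it into congestive failure.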

Netflix's business keeps evolving as it aims for global reach, and it does its best to launch new titles worldwide. However, some content is restricted to certain regions, and some content draws a much larger audience in certain regions while other content gets less attention. To address this, Netflix applies region-specific content policies.

Netflix's goals are to:

- Reduce TTR (Time to Recovery): minimize the time taken to recover from issues.
- Come out of load spikes quickly: recover from load spikes as soon as possible and process user traffic efficiently.
- Minimize region failovers: reduce the need for region failover to avoid complications and stay ahead of potential issues.
- Stay ahead of load spikes: anticipate load spikes and always maintain a resilient posture, understanding that they can happen at any time.

Are you curious to know more?

What solutions did Netflix come up with?

When they already know how load spikes look, what measures did they take?

What is the role of Data Scientists, ML Engineers, and SREs in solving these issues?

How did they use insights from historical data and past events?

How did they experiment before load spikes happened again?

What is Netflix’s "Hammer Policy"?

What are the components responsible for detecting Time to Recovery (TTR)?

How do metrics play a vital role in solving this issue?

Conclusion:

In Part 1 of this blog, we explored how Netflix, a global streaming leader, ensures resilience and scalability by addressing key challenges related to load spikes. As the company continues to innovate, its use of advanced technologies such as containers, AWS, and Kubernetes, alongside strong DevOps practices, has empowered it to stay ahead of traffic surges. Its proactive strategies, such as load shedding, predictive scaling, and continuous testing, are essential to keeping the user experience seamless, even during high-demand events. Netflix's focus on minimizing downtime, reducing Time to Recovery (TTR), and anticipating load spikes reflects its commitment to providing uninterrupted service to millions of users worldwide.

This is just Part 1 of this blog series on Netflix's load spikes! In the next part, we will answer all the questions listed above. Stay tuned as we dive deeper into Netflix's solutions, the roles of data scientists, ML engineers, and SREs, and how insights and metrics play a pivotal role in addressing these challenges.

References:

- Number of subscribers: Netflix, Hotstar
- Open Connect: image
- Starts per Second graph: AWS re:Invent 2024

Thank you for staying and reading till the end! Your valuable feedback is much appreciated.

Do connect with me on social media

LinkedIn : https://www.linkedin.com/in/sathyanarayan-k/